Domain Generalization via Semi-supervised Meta Learning

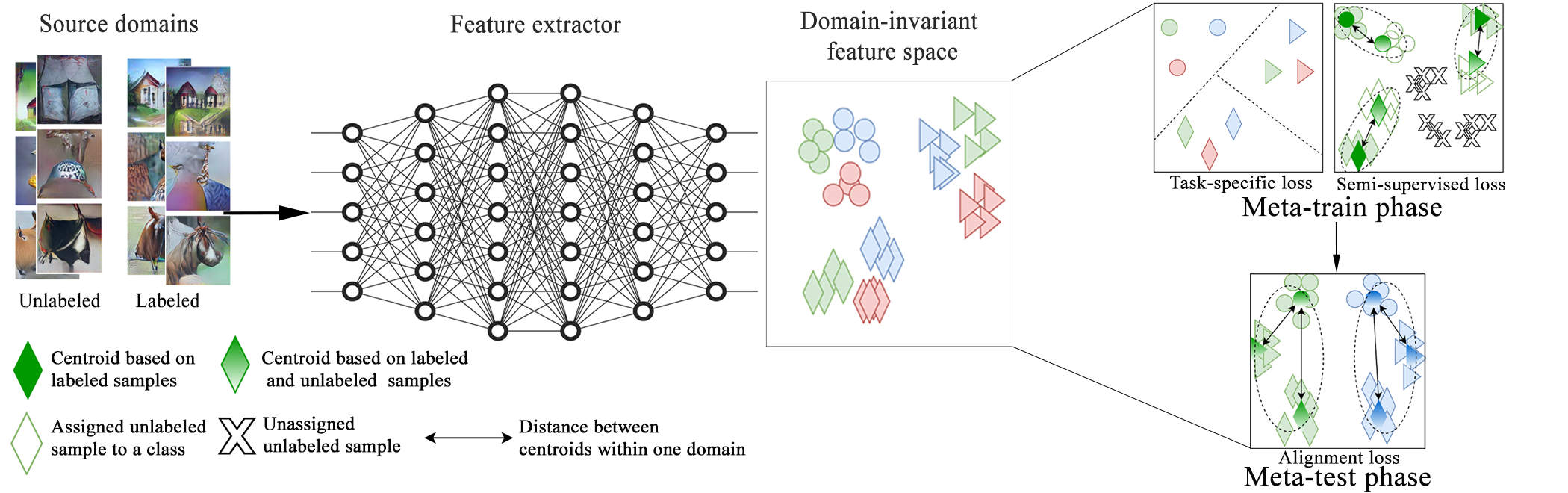

The goal of domain generalization is to learn from multiple source domains so that a model generalizes to unseen target domains despite distribution discrepancy. Current state-of-the-art methods in this area are fully supervised, but for many real-world problems it is often infeasible to obtain enough labeled samples. In this paper, we propose DGSML, the first domain generalization method to leverage unlabeled samples, which combines the episodic training of meta learning with semi-supervised learning. DGSML employs an entropy-based pseudo-labeling approach to assign labels to unlabeled samples and then utilizes a novel discrepancy loss to ensure that the class centroids computed before and after labeling the unlabeled samples are close to each other. To learn a domain-invariant representation, it also utilizes a novel alignment loss to ensure that the distances between pairs of class centroids, computed after adding the unlabeled samples, are preserved across different domains. DGSML is trained with a meta learning approach that mimics the distribution shift between the input source domains and unseen target domains. Experimental results on benchmark datasets indicate that DGSML outperforms state-of-the-art domain generalization and semi-supervised learning methods.
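The abstract does not give the exact form of the pseudo-labeling rule or of the two losses, so the following is only a minimal NumPy sketch of one plausible reading. The function names, the entropy threshold `max_entropy`, the use of Euclidean distance, and the handling of empty classes are all assumptions made for illustration, not the formulation used by DGSML.

```python
import numpy as np

def entropy_pseudo_labels(probs, max_entropy):
    """Return argmax labels and a mask selecting low-entropy (confident) predictions.

    Assumption: a sample is pseudo-labeled only if the entropy of its
    predicted class distribution is at most `max_entropy`.
    """
    eps = 1e-12  # avoids log(0)
    entropy = -np.sum(probs * np.log(probs + eps), axis=1)
    return probs.argmax(axis=1), entropy <= max_entropy

def class_centroids(features, labels, num_classes):
    """Mean feature vector per class; a class with no samples keeps a zero vector."""
    centroids = np.zeros((num_classes, features.shape[1]))
    for c in range(num_classes):
        members = features[labels == c]
        if len(members) > 0:
            centroids[c] = members.mean(axis=0)
    return centroids

def discrepancy_loss(centroids_before, centroids_after):
    """Assumed form: mean squared Euclidean distance between matching class
    centroids computed before and after adding pseudo-labeled samples."""
    return float(np.mean(np.sum((centroids_before - centroids_after) ** 2, axis=1)))

def pairwise_distances(centroids):
    """Euclidean distance between every pair of class centroids."""
    diff = centroids[:, None, :] - centroids[None, :, :]
    return np.sqrt(np.sum(diff ** 2, axis=-1))

def alignment_loss(centroids_a, centroids_b):
    """Assumed form: mean squared difference between the centroid-distance
    matrices of two domains, so inter-class geometry is preserved across domains."""
    gap = pairwise_distances(centroids_a) - pairwise_distances(centroids_b)
    return float(np.mean(gap ** 2))

# Hypothetical usage on one source domain with random features.
rng = np.random.default_rng(0)
num_classes, dim = 3, 4
labeled_x = rng.normal(size=(30, dim))
labeled_y = rng.integers(0, num_classes, size=30)
unlabeled_x = rng.normal(size=(20, dim))
unlabeled_probs = rng.dirichlet(np.ones(num_classes) * 0.2, size=20)

pseudo_y, keep = entropy_pseudo_labels(unlabeled_probs, max_entropy=0.5)
before = class_centroids(labeled_x, labeled_y, num_classes)
after = class_centroids(
    np.vstack([labeled_x, unlabeled_x[keep]]),
    np.concatenate([labeled_y, pseudo_y[keep]]),
    num_classes,
)
loss_disc = discrepancy_loss(before, after)
```

In this sketch the alignment term compares distance matrices rather than the centroids themselves, so two domains whose centroids differ only by a translation incur zero alignment loss. That matches the abstract's wording that pairwise centroid distances, not absolute positions, are preserved across domains.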


PACS
