Inference for extreme earthquake magnitudes accounting for a time-varying measurement process

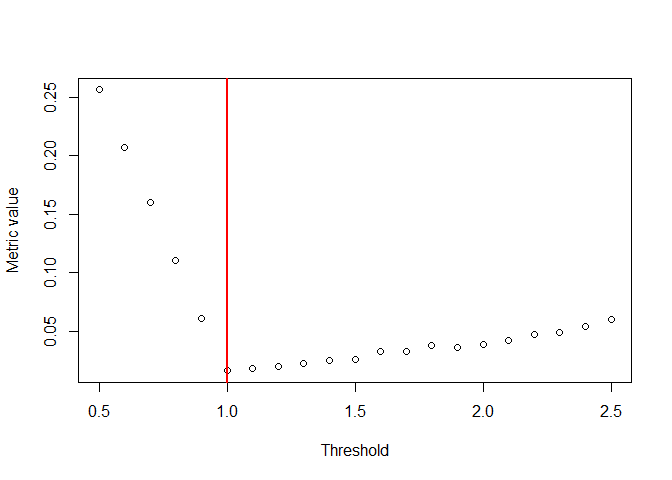

Investment in measuring a process more completely or accurately is only useful if these improvements can be utilised during modelling and inference. We consider how improvements to data quality over time can be incorporated when selecting a modelling threshold and in the subsequent inference of an extreme value analysis. Motivated by earthquake catalogues, we consider variable data quality in the form of rounded and incompletely observed data. We develop an approach to select a time-varying modelling threshold that makes best use of the available data, accounting for uncertainty in the magnitude model and for the rounding of observations. We show the benefits of the proposed approach on simulated data and apply the method to a catalogue of earthquakes induced by gas extraction in the Netherlands. Doing so more than doubles the usable catalogue size and greatly increases the precision of high-magnitude quantile estimates, which has important consequences for the design and cost of earthquake defences. For the first time, we find compelling data-driven evidence against the applicability of the Gutenberg-Richter law to these earthquakes. Furthermore, our approach to automated threshold selection appears to have much potential for generic applications of extreme value methods.
