PLIP: Language-Image Pre-training for Person Representation Learning

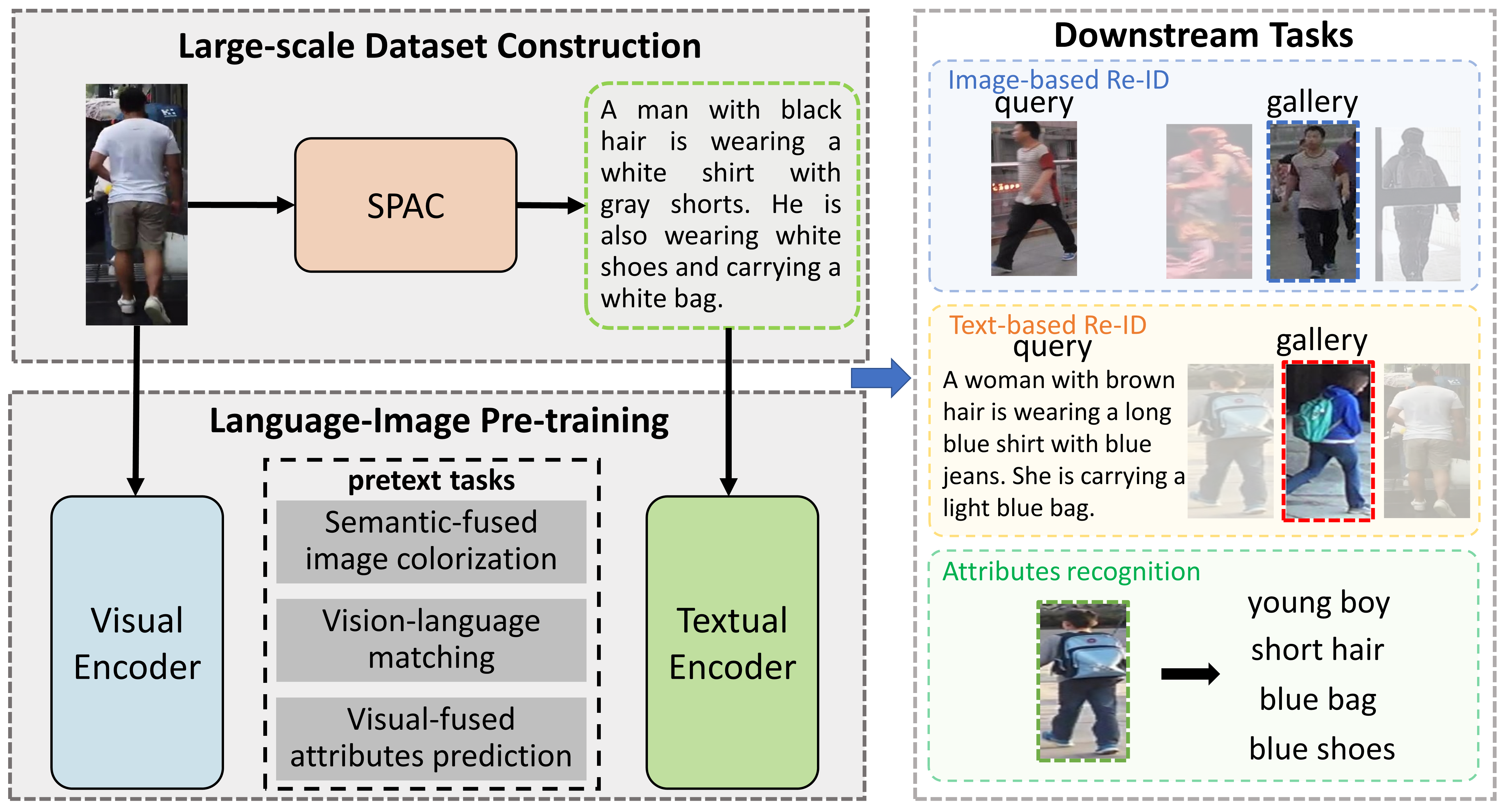

Language-image pre-training is an effective technique for learning powerful representations in general domains. However, when applied directly to person representation learning, these general pre-training methods perform unsatisfactorily. The reason is that they neglect critical person-related characteristics, i.e., fine-grained attributes and identities. To address this issue, we propose a novel language-image pre-training framework for person representation learning, termed PLIP. Specifically, we design three pretext tasks: 1) Text-guided Image Colorization, which aims to establish the correspondence between person-related image regions and fine-grained color-part textual phrases; 2) Image-guided Attributes Prediction, which aims to mine fine-grained attribute information about the person's body in the image; and 3) Identity-based Vision-Language Contrast, which aims to correlate the cross-modal representations at the identity level rather than the instance level. Moreover, to implement our pre-training framework, we construct SYNTH-PEDES, a large-scale person dataset of image-text pairs, by automatically generating textual annotations. We pre-train PLIP on SYNTH-PEDES and evaluate our models across a range of downstream person-centric tasks. PLIP not only significantly improves existing methods on all these tasks, but also shows strong ability in the zero-shot and domain generalization settings. The code, dataset and weights will be released at https://github.com/Zplusdragon/PLIP.
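To illustrate what contrasting at the identity level rather than the instance level could mean in practice, the following is a minimal sketch and not the loss used in PLIP. It assumes a softmax-based image-to-text contrastive objective in which every text sharing the image's identity label counts as a positive. The function name `identity_contrastive_loss`, the temperature `tau=0.07`, and the averaging over positives are all illustrative assumptions.

```python
import math


def identity_contrastive_loss(img_emb, txt_emb, ids, tau=0.07):
    """Image-to-text contrastive loss with identity-level positives.

    Hypothetical sketch: img_emb[i] and txt_emb[i] form one image-text pair,
    and ids[i] is the person identity of that pair. An instance-level loss
    would treat only text i as the positive for image i; here every text
    with the same identity is a positive.
    """
    def normalize(v):
        norm = math.sqrt(sum(x * x for x in v))
        return [x / norm for x in v]

    img = [normalize(v) for v in img_emb]
    txt = [normalize(v) for v in txt_emb]

    total = 0.0
    for i, v in enumerate(img):
        # Cosine similarity between image i and every text, scaled by tau.
        logits = [sum(a * b for a, b in zip(v, t)) / tau for t in txt]
        # Numerically stable log of the softmax normalizer.
        m = max(logits)
        log_z = m + math.log(sum(math.exp(l - m) for l in logits))
        # All texts that share the identity of image i are positives.
        positives = [j for j, pid in enumerate(ids) if pid == ids[i]]
        # Average negative log-likelihood over the positives.
        total += -sum(logits[j] - log_z for j in positives) / len(positives)
    return total / len(img)


if __name__ == "__main__":
    images = [[1.0, 0.0], [1.0, 0.1], [0.0, 1.0]]
    texts = [[1.0, 0.0], [1.0, 0.1], [0.0, 1.0]]
    # Pairs 0 and 1 depict the same person; pair 2 is a different person.
    print(identity_contrastive_loss(images, texts, ids=[0, 0, 1]))
```

When every identity label is unique, the set of positives for each image collapses to its own paired text, so the sketch reduces to an ordinary instance-level contrastive loss.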

Datasets

Introduced in the Paper:

SYNTH-PEDES

Used in the Paper:

Market-1501

DukeMTMC-reID

MSMT17

CUHK-SYSU

CUHK-PEDES

PRW

PETA

ICFG-PEDES

LPW
