Protecting Sensory Data against Sensitive Inferences

There is growing concern about how personal data are used when users grant applications direct access to the sensors of their mobile devices. In fact, high resolution temporal data generated by motion sensors reflect directly the activities of a user and indirectly physical and demographic attributes. In this paper, we propose a feature learning architecture for mobile devices that provides flexible and negotiable privacy-preserving sensor data transmission by appropriately transforming raw sensor data. The objective is to move from the current binary setting of granting or not permission to an application, toward a model that allows users to grant each application permission over a limited range of inferences according to the provided services. The internal structure of each component of the proposed architecture can be flexibly changed and the trade-off between privacy and utility can be negotiated between the constraints of the user and the underlying application. We validated the proposed architecture in an activity recognition application using two real-world datasets, with the objective of recognizing an activity without disclosing gender as an example of private information. Results show that the proposed framework maintains the usefulness of the transformed data for activity recognition, with an average loss of only around three percentage points, while reducing the possibility of gender classification to around 50\%, the target random guess, from more than 90\% when using raw sensor data. We also present and distribute MotionSense, a new dataset for activity and attribute recognition collected from motion sensors.

PDF AbstractCode

Datasets

Introduced in the Paper:

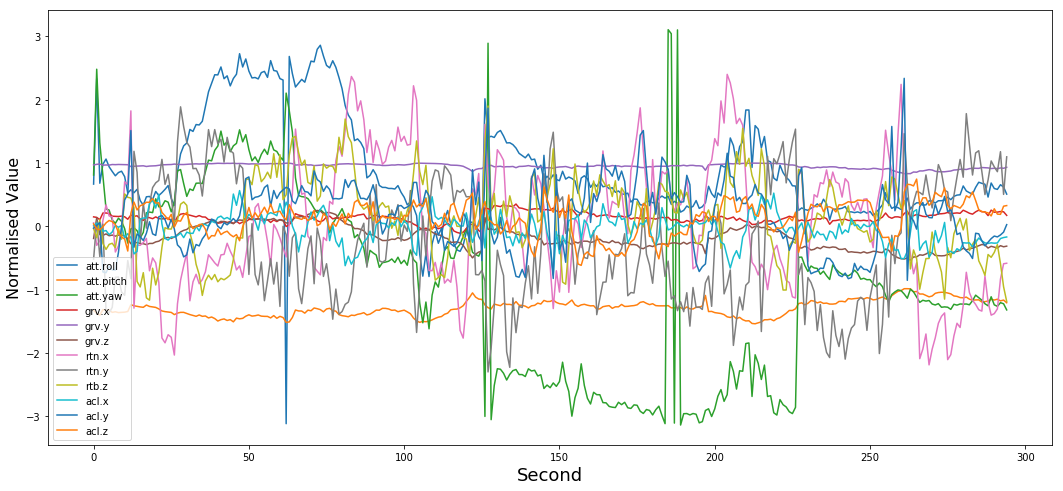

MotionSense

MotionSense