PsyEval: A Suite of Mental Health Related Tasks for Evaluating Large Language Models

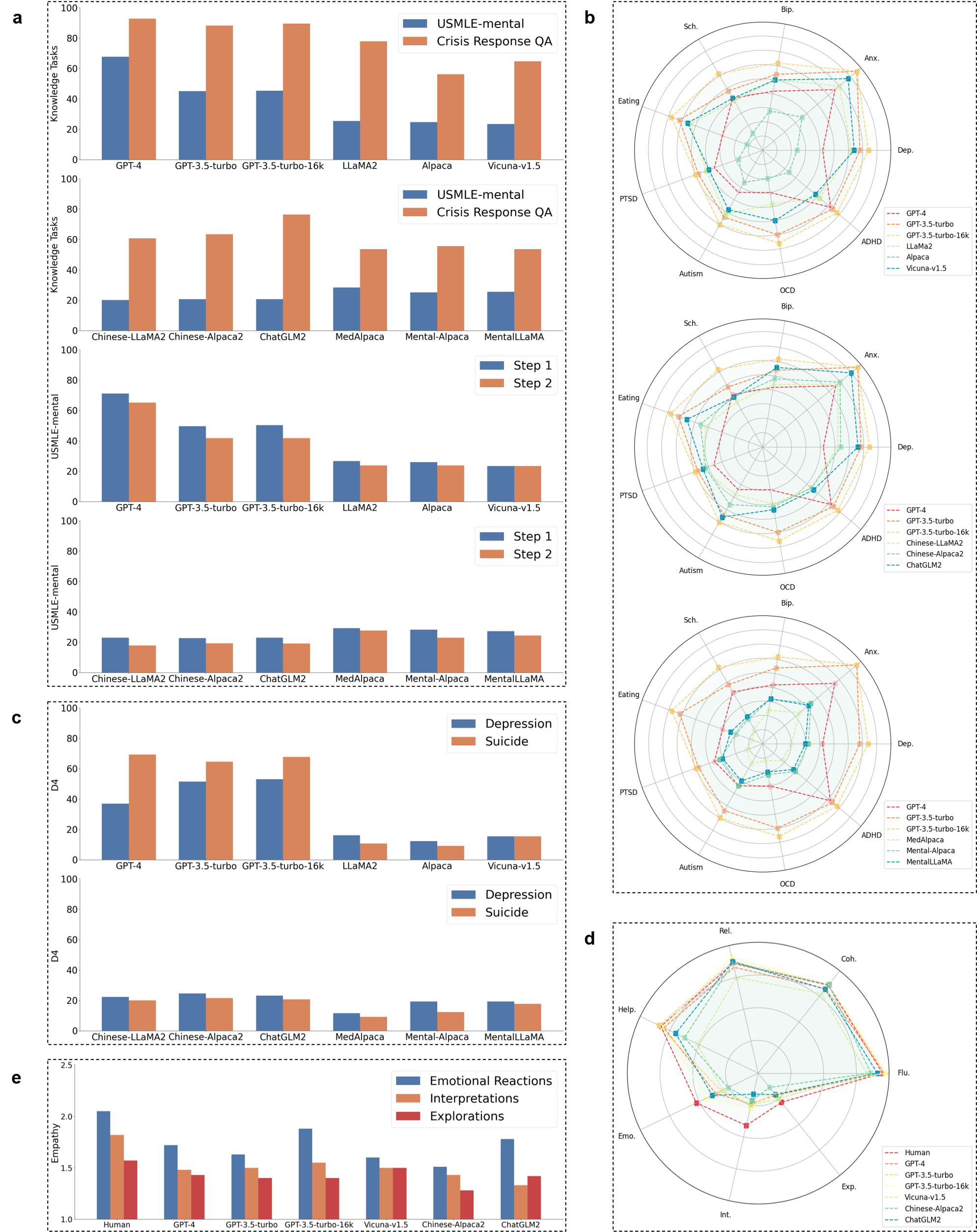

Evaluating Large Language Models (LLMs) in the mental health domain poses challenges distinct from those in other domains, because symptoms are subtle, highly subjective, and vary significantly among individuals. This paper presents PsyEval, the first comprehensive suite of mental health-related tasks for evaluating LLMs. PsyEval encompasses five sub-tasks that evaluate three critical dimensions of mental health. The suite is designed to capture the unique challenges and intricacies of mental health-related tasks, making PsyEval a specialized tool for evaluating LLM performance in this domain. We evaluate twelve advanced LLMs using PsyEval. Experimental results demonstrate significant room for improvement in current LLMs on mental health tasks and reveal potential directions for future model optimization.
