Quantifying Representation Reliability in Self-Supervised Learning Models

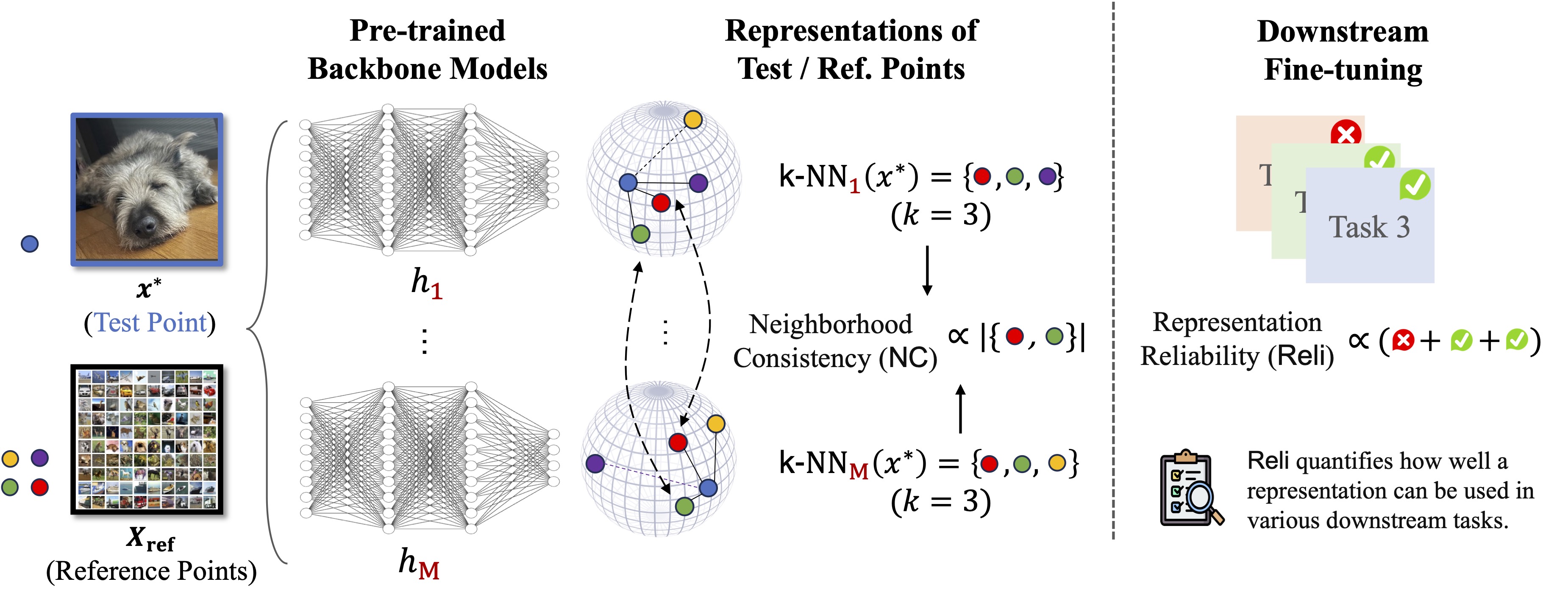

Self-supervised learning models extract general-purpose representations from data. Quantifying the reliability of these representations is crucial, as many downstream models rely on them as input for their own tasks. To this end, we introduce a formal definition of representation reliability: the representation for a given test point is considered reliable if the downstream models built on top of that representation can consistently generate accurate predictions for that test point. However, accessing downstream data to quantify representation reliability is often infeasible or restricted due to privacy concerns. We propose an ensemble-based method for estimating representation reliability without knowing the downstream tasks a priori. Our method is based on the concept of neighborhood consistency across distinct pre-trained representation spaces. The key insight is to find shared neighboring points as anchors to align these representation spaces before comparing them. We demonstrate through comprehensive numerical experiments that our method captures representation reliability with a high degree of correlation, achieving robust and favorable performance compared with baseline methods.
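As a rough illustration of the neighborhood-consistency idea, the sketch below scores a test point by how much its nearest neighbors agree across an ensemble of representation spaces. It is a simplified proxy and not the paper's exact estimator: the function names (`knn_indices`, `neighborhood_consistency`), the use of cosine similarity, the Jaccard overlap, and the default `k` are all assumptions made for this example. The only requirement is that every encoder embeds the same reference points in the same order, so that neighbor indices act as shared anchors between spaces that are otherwise not directly comparable.

```python
from itertools import combinations

import numpy as np


def knn_indices(query, reference, k):
    """Return the index set of the k reference points most similar to `query`.

    Cosine similarity is an assumption of this sketch, not taken from the paper.
    """
    ref = reference / np.linalg.norm(reference, axis=1, keepdims=True)
    q = query / np.linalg.norm(query)
    sims = ref @ q
    return set(np.argsort(-sims)[:k].tolist())


def neighborhood_consistency(query_reps, reference_reps, k=10):
    """Mean pairwise Jaccard overlap of k-NN index sets across encoders.

    query_reps:     list of 1-D arrays, the test point embedded by each encoder.
    reference_reps: list of 2-D arrays (n_points x dim_i), the SAME reference
                    points embedded by each encoder, in the same row order.
    At least two encoders are needed. Higher scores mean the encoders agree
    more on which reference points are neighbors of the test point.
    """
    neighbor_sets = [
        knn_indices(q, r, k) for q, r in zip(query_reps, reference_reps)
    ]
    overlaps = [
        len(a & b) / len(a | b) for a, b in combinations(neighbor_sets, 2)
    ]
    return float(np.mean(overlaps))
```

Because the comparison is made on neighbor indices rather than on raw coordinates, two spaces that differ only by a rotation receive a score close to 1, even though their coordinates cannot be compared directly.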


CIFAR-10

ImageNet

CIFAR-100

Tiny ImageNet

STL-10