Self-Chained Image-Language Model for Video Localization and Question Answering

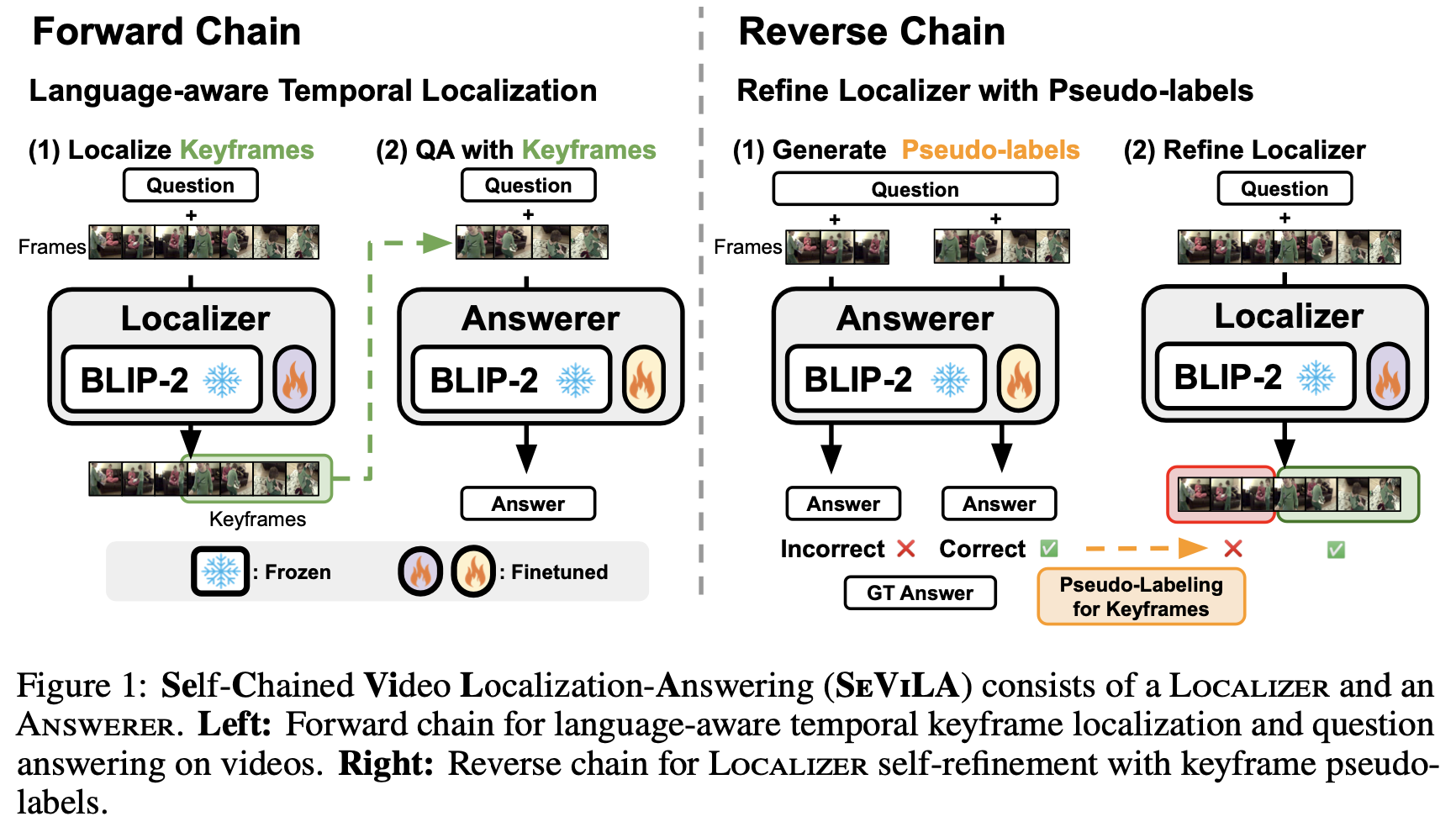

Recent studies have shown promising results on utilizing large pre-trained image-language models for video question answering. While these image-language models can efficiently bootstrap the representation learning of video-language models, they typically concatenate uniformly sampled video frames as visual inputs without explicit language-aware temporal modeling. When only a portion of a video input is relevant to the language query, such uniform frame sampling can often miss important visual cues. Although humans often find a video moment to focus on and rewind it to answer questions, training a query-aware video moment localizer often requires expensive annotations and high computational costs. To address this issue, we propose Self-Chained Video Localization-Answering (SeViLA), a novel framework that leverages a single image-language model (BLIP-2) to tackle both temporal keyframe localization and QA on videos. The SeViLA framework consists of two modules, a Localizer and an Answerer, both parameter-efficiently fine-tuned from BLIP-2. We propose two ways of chaining these modules for cascaded inference and self-refinement. First, in the forward chain, the Localizer finds multiple language-aware keyframes in a video, which the Answerer uses to predict the answer. Second, in the reverse chain, the Answerer generates keyframe pseudo-labels to refine the Localizer, alleviating the need for expensive video moment localization annotations. Our SeViLA framework outperforms several strong baselines on 5 challenging video QA and event prediction benchmarks, and achieves state-of-the-art results in both fine-tuning (NExT-QA, STAR) and zero-shot (NExT-QA, STAR, How2QA, VLEP) settings. We also analyze the impact of the Localizer, compare it with other temporal localization models, study its pre-training and self-refinement, and vary the number of keyframes.
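The two chains described in the abstract can be illustrated with a small, self-contained Python sketch. It is not the authors' implementation: the names `uniform_sample`, `forward_chain`, `reverse_chain_pseudo_labels`, `toy_localizer_score`, and `toy_answerer` are hypothetical, frames are plain strings standing in for images, and the toy scoring functions stand in for the two BLIP-2-based modules. The pseudo-labeling rule used here (a frame counts as a keyframe when the Answerer gets the known answer from that frame alone) is an assumption about how the reverse chain might work, since the abstract does not spell it out.

```python
from typing import Callable, List, Sequence, Tuple

Frame = str  # stand-in for an image; a real system would use tensors


def uniform_sample(num_frames: int, k: int) -> List[int]:
    """Question-agnostic baseline: k evenly spaced frame indices."""
    if k >= num_frames:
        return list(range(num_frames))
    step = num_frames / k
    return [int(step * i + step / 2) for i in range(k)]


def forward_chain(
    frames: Sequence[Frame],
    question: str,
    localizer_score: Callable[[Frame, str], float],
    answerer: Callable[[Sequence[Frame], str], str],
    k: int,
) -> Tuple[List[int], str]:
    """Forward chain: score every frame against the question, keep the
    top-k as keyframes (in temporal order), and let the answerer use them."""
    scores = [localizer_score(f, question) for f in frames]
    top = sorted(range(len(frames)), key=lambda i: scores[i], reverse=True)[:k]
    keyframe_idx = sorted(top)  # restore temporal order
    answer = answerer([frames[i] for i in keyframe_idx], question)
    return keyframe_idx, answer


def reverse_chain_pseudo_labels(
    frames: Sequence[Frame],
    question: str,
    gold_answer: str,
    answerer: Callable[[Sequence[Frame], str], str],
) -> List[int]:
    """Reverse chain (assumed rule): label a frame 1 if the answerer gets
    the known answer from that frame alone, else 0. These labels could then
    supervise the localizer without manual moment annotations."""
    return [int(answerer([f], question) == gold_answer) for f in frames]


# --- Toy stand-ins for the two modules (hypothetical, illustration only) ---
def toy_localizer_score(frame: Frame, question: str) -> float:
    """Counts words shared by the frame description and the question."""
    q_words = set(question.lower().rstrip("?").split())
    return float(len(q_words & set(frame.lower().split())))


def toy_answerer(frames: Sequence[Frame], question: str) -> str:
    """Answers 'ball' only if some selected frame shows the ball."""
    return "ball" if any("ball" in f for f in frames) else "unknown"


VIDEO = ["sky", "dog runs", "dog catches ball", "grass", "sky", "sky"]
QUESTION = "what does the dog catch?"

if __name__ == "__main__":
    uniform_idx = uniform_sample(len(VIDEO), k=2)
    print("uniform:", uniform_idx,
          toy_answerer([VIDEO[i] for i in uniform_idx], QUESTION))
    print("forward chain:",
          forward_chain(VIDEO, QUESTION, toy_localizer_score, toy_answerer, k=2))
    print("pseudo-labels:",
          reverse_chain_pseudo_labels(VIDEO, QUESTION, "ball", toy_answerer))
```

In this toy video, uniform sampling with two frames skips the one frame that shows the ball, while the question-aware selection keeps it. That is the failure mode of uniform sampling the abstract points to, reduced to its simplest form.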

NeurIPS 2023

| Task | Dataset | Model | Metric Name | Metric Value | Global Rank |
|---|---|---|---|---|---|
| Zero-Shot Video Question Answer | EgoSchema (fullset) | SeViLA (4B) | Accuracy | 22.7 | #13 |
| Zero-Shot Video Question Answer | EgoSchema (subset) | SeViLA (4B) | Accuracy | 25.7 | #7 |
| Zero-Shot Video Question Answer | IntentQA | SeViLA (4B) | Accuracy | 60.9 | #4 |
| Zero-Shot Video Question Answer | NExT-QA | SeViLA (4B) | Accuracy | 63.6 | #8 |
| Video Question Answering | NExT-QA | SeViLA | Accuracy | 73.8 | #6 |
| Zero-Shot Video Question Answer | STAR Benchmark | SeViLA | Accuracy | 42.2 | #4 |
| Video Question Answering | STAR Benchmark | SeViLA | Average Accuracy | 64.9 | #3 |
| Zero-Shot Video Question Answer | TVQA | SeViLA (no speech) | Accuracy | 38.2 | #6 |

Datasets: TVQA, NExT-QA, How2QA, STAR Benchmark, VLEP