Seq-U-Net: A One-Dimensional Causal U-Net for Efficient Sequence Modelling

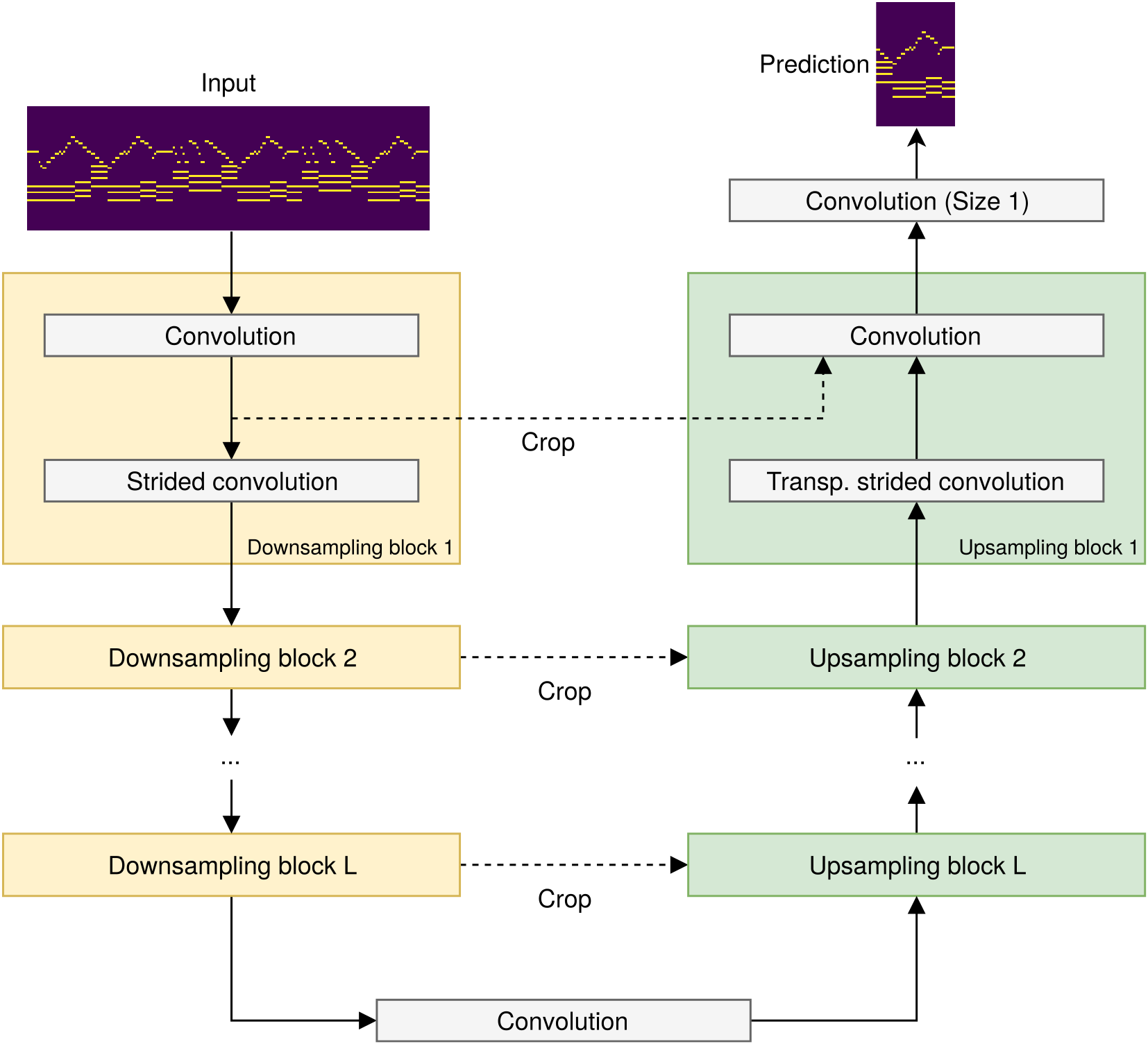

Convolutional neural networks (CNNs) with dilated filters such as the Wavenet or the Temporal Convolutional Network (TCN) have shown good results in a variety of sequence modelling tasks. However, efficiently modelling long-term dependencies in these sequences is still challenging. Although the receptive field of these models grows exponentially with the number of layers, computing the convolutions over very long sequences of features in each layer is time- and memory-intensive, prohibiting the use of longer receptive fields in practice. To increase efficiency, we make use of the "slow feature" hypothesis, which states that many features of interest vary slowly over time. To exploit this, we use a U-Net architecture that computes features at multiple time-scales and adapt it to our auto-regressive scenario by making convolutions causal. We apply our model ("Seq-U-Net") to a variety of tasks including language and audio generation. In comparison to TCN and Wavenet, our network consistently saves memory and computation time, with speed-ups for training and inference of over 4x in the audio generation experiment in particular, while achieving comparable performance in all tasks.
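
To make the terms "causal" and "receptive field" concrete, here is a minimal NumPy sketch. It is not the authors' implementation: the function names `causal_conv1d` and `receptive_field` are hypothetical, and the dilation schedule 1, 2, 4, ... with kernel size 2 is an assumption chosen to illustrate the exponential growth mentioned above.

```python
import numpy as np

def causal_conv1d(x, w, dilation=1):
    """Causal dilated convolution: y[t] = sum_j w[j] * x[t - j*dilation].

    Inputs before the start of the sequence are treated as zeros, so
    y[t] never depends on x[t+1], x[t+2], ...
    """
    x = np.asarray(x, dtype=float)
    k = len(w)
    pad = (k - 1) * dilation              # left-padding only, never right
    xp = np.concatenate([np.zeros(pad), x])
    y = np.zeros(len(x))
    for j in range(k):
        start = pad - j * dilation        # tap j looks j*dilation steps back
        y += w[j] * xp[start:start + len(x)]
    return y

def receptive_field(num_layers, kernel_size=2):
    """Receptive field of a stack with assumed dilations 1, 2, ..., 2**(num_layers-1)."""
    return 1 + (kernel_size - 1) * (2 ** num_layers - 1)

x = np.arange(8.0)
y = causal_conv1d(x, [1.0, 1.0], dilation=2)   # y[t] = x[t] + x[t-2]

# Changing "future" inputs leaves earlier outputs untouched.
x_future = x.copy()
x_future[5:] = 100.0
y_future = causal_conv1d(x_future, [1.0, 1.0], dilation=2)
```

Under these assumptions, ten layers already cover 1024 time steps, but every layer still processes the sequence at full resolution, which is the cost the abstract refers to.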

Penn Treebank

JSB Chorales

MuseData

Nottingham