StainNet: a fast and robust stain normalization network

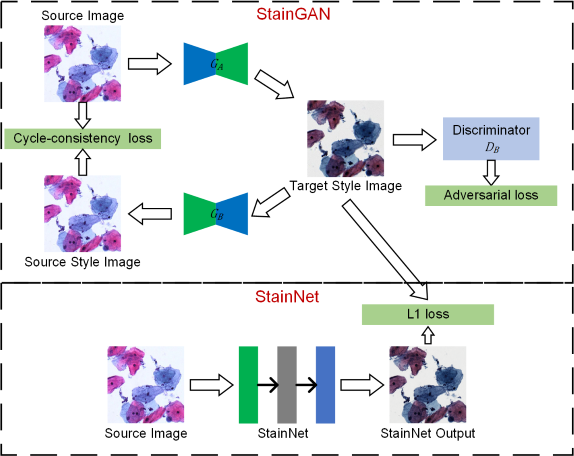

Stain normalization usually refers to transferring the color distribution of a source image to that of a target image, and it is widely used in biomedical image analysis. Conventional stain normalization constructs a pixel-by-pixel color mapping model that depends on a single reference image, so it cannot accurately achieve style transformation between image datasets. In principle, deep learning-based methods can solve this style transformation well because of their complex network structures; however, that same complexity leads to low computational efficiency and to artifacts in the transformed images, which has restricted their practical application. Here, we use distillation learning to reduce the complexity of deep learning methods and propose a fast and robust network, called StainNet, that learns the color mapping between the source image and the target image. StainNet learns the color mapping relationship from a whole dataset and adjusts color values in a pixel-to-pixel manner. This pixel-to-pixel manner restricts the network size and avoids artifacts in the style transformation. Results on cytopathology and histopathology datasets show that StainNet achieves performance comparable to that of deep learning-based methods. Computational results demonstrate that StainNet is more than 40 times faster than StainGAN and can normalize a 100,000 × 100,000 whole slide image in 40 seconds.
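The abstract describes two ingredients: a network that adjusts each pixel's color independently, and distillation, where that small network is trained to reproduce the outputs of a slower deep model. The plain-Python sketch below illustrates both ideas under stated assumptions. The student is a tiny per-pixel MLP (applying the same MLP to every pixel is equivalent to stacked 1×1 convolutions). `teacher_transform` is a hypothetical fixed color transform standing in for the teacher's outputs; in practice this role would be played by a deep style-transfer model such as StainGAN. The names `PixelMLP`, `distill`, `normalize_image`, and all hyperparameters are illustrative and are not taken from the StainNet implementation.

```python
import random


def teacher_transform(rgb):
    """Hypothetical stand-in for a slow teacher network (e.g. a GAN).

    Maps one RGB pixel (floats in [0, 1]) to a 'target-style' RGB pixel.
    """
    r, g, b = rgb
    return (0.8 * r + 0.15, 0.7 * g + 0.2 * b, 0.9 * b + 0.05)


class PixelMLP:
    """Pixel-wise student network: 3 -> hidden -> 3 with a ReLU.

    The output for a pixel depends only on that pixel's color, so the
    model has no spatial context and cannot introduce spatial artifacts.
    """

    def __init__(self, hidden=8, seed=0):
        rng = random.Random(seed)
        self.w1 = [[rng.uniform(-0.5, 0.5) for _ in range(3)] for _ in range(hidden)]
        self.b1 = [0.1] * hidden
        self.w2 = [[rng.uniform(-0.5, 0.5) for _ in range(hidden)] for _ in range(3)]
        self.b2 = [0.0] * 3

    def forward(self, x):
        pre = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(self.w1, self.b1)]
        h = [max(0.0, p) for p in pre]
        y = [sum(w * hi for w, hi in zip(row, h)) + b for row, b in zip(self.w2, self.b2)]
        return pre, h, y

    def __call__(self, x):
        # Clamp to the valid color range at inference time.
        return tuple(min(1.0, max(0.0, v)) for v in self.forward(x)[2])

    def sgd_step(self, x, target, lr):
        pre, h, y = self.forward(x)
        # Gradient of the per-pixel MSE (mean over the 3 channels) w.r.t. y.
        dy = [2.0 * (yi - ti) / 3.0 for yi, ti in zip(y, target)]
        # Backpropagate to the hidden layer before updating w2.
        dh = [sum(dy[k] * self.w2[k][j] for k in range(3)) for j in range(len(h))]
        dpre = [d if p > 0 else 0.0 for d, p in zip(dh, pre)]
        for k in range(3):
            for j in range(len(h)):
                self.w2[k][j] -= lr * dy[k] * h[j]
            self.b2[k] -= lr * dy[k]
        for j in range(len(h)):
            for i in range(3):
                self.w1[j][i] -= lr * dpre[j] * x[i]
            self.b1[j] -= lr * dpre[j]


def mse(model, pixels, targets):
    """Mean squared error of the raw (unclamped) student output vs. targets."""
    total = 0.0
    for x, t in zip(pixels, targets):
        y = model.forward(x)[2]
        total += sum((yi - ti) ** 2 for yi, ti in zip(y, t)) / 3.0
    return total / len(pixels)


def distill(student, source_pixels, teacher, epochs=30, lr=0.05, seed=0):
    """Train the student to imitate the teacher on pixels from a whole dataset."""
    rng = random.Random(seed)
    targets = [teacher(p) for p in source_pixels]  # teacher labels, computed once
    data = list(zip(source_pixels, targets))
    for _ in range(epochs):
        rng.shuffle(data)
        for x, t in data:
            student.sgd_step(x, t, lr)
    return mse(student, source_pixels, targets)


def normalize_image(student, image):
    """Apply the student pixel by pixel; image is a list of rows of RGB tuples."""
    return [[student(px) for px in row] for row in image]


if __name__ == "__main__":
    rng = random.Random(42)
    source = [(rng.random(), rng.random(), rng.random()) for _ in range(400)]
    targets = [teacher_transform(p) for p in source]
    student = PixelMLP(hidden=8, seed=1)
    before = mse(student, source, targets)
    after = distill(student, source, teacher_transform)
    print(f"MSE before: {before:.4f}  after: {after:.4f}")
```

Because the student sees one pixel at a time, its cost grows linearly with the number of pixels and identical input colors always map to identical output colors. As a scale check on the reported figures, a 100,000 × 100,000 slide has 10^10 pixels, so normalizing it in 40 seconds corresponds to roughly 2.5 × 10^8 pixels per second.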

